Posted on: 28 Feb 2014

In our last blog post we discussed fundamental time scale assumptions and the implications these assumptions can have for the final model. This month we discuss types of assumptions that have less effect on the structure and contents of the model, but that can certainly lead to (computational) problems if they are not recognized.

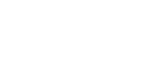

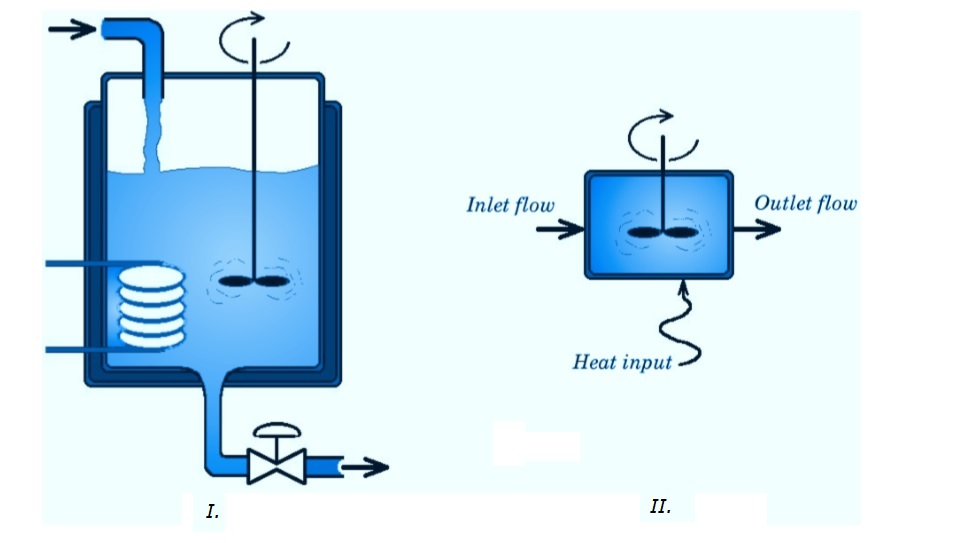

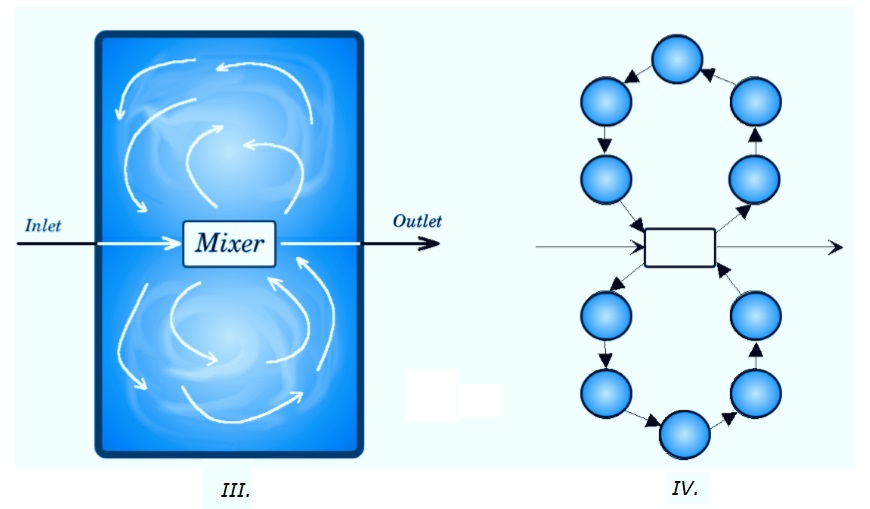

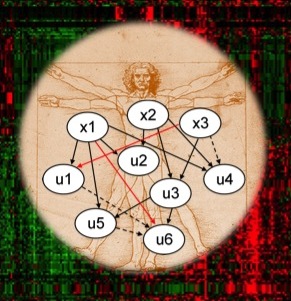

Constraints and assumptions describe all kinds of algebraic relationships between process quantities which have to hold at any time. A volume constraint, for example, restricts the volume of a simple system or the sum of volumes of a set of systems. Assumptions may result in models which are cumbersome to solve. Potential problems can very often be avoided at an early stage of the model development by keeping clear of certain assumptions or by directly dealing with the cause of the potential problems.

As discussed in the previous blog post one can distinguish between several types of assumptions: Structural assumptions (i.e. the construction of the physical topology), order of magnitude assumptions (very small versus very large) and assumptions on relative time scale (very slow versus very fast). These assumptions are usually introduced with a goal, namely to simplify the description of the behaviour by neglecting what is considered insignificant in the view of the application one has in mind for the model. Whilst indeed such assumptions do simplify the description, they are also the source of numerous problems, such as “index problems”, which make the solving of the equations very difficult.

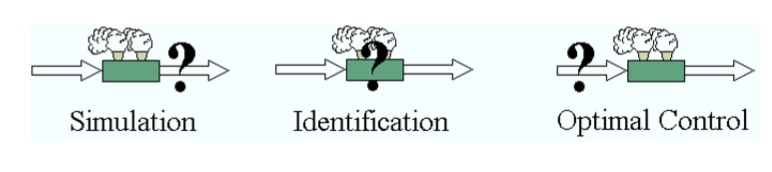

One of the major advantages of writing a model in DAE form as opposed to ODE form, is that a modeller does not have to perform a set of often cumbersome mathematical manipulations, such as substitution and symbolic differentiation. Dynamic process models can be divided into “low index” models (index 0 and 1) and “high index” models (index two and higher and some index one models). The differential index or index is a measure of the problems related to initialisation and integration of dynamic process models. The problems related to solve a dynamic process model increase with increasing index.

We will not go into details nor give a mathematically correct definition of the index of a model at this stage, but will just state that the index of a DAE is the number of times that all or part of a DAE must be differentiated (with respect to time) in order to get an ODE.

It is not recommend, by the way, to actually perform this series of differentiations as a general solution procedure for DAEs. Rather the number of such differentiation steps that would be required in theory turns out to be an important quantity in understanding the behaviour of numerical methods.

According to the definition an ODE (either explicit or implicit) has index zero. DAEs with index zero and one are generally much simpler to understand (and much simpler to solve) than DAEs with index two or higher.

With our modelling method we strive to produce semi-explicit index one models, since these can be easily used for simulation by any DAE-solver. Higher index models must either be simulated directly by a special integrator which tackles high index DAEs, or be transformed to semi-explicit index one and integrated. Two simple tests that guarantee structurally semi-explicit index one models are:

Our modelling method forces a model designer to be more aware of the assumptions he makes. Therefore, potential high index model formulations can be detected and/or avoided at an early stage of the model development. Knowing the causes of high index formulations helps the model designer in carefully considering the modelling purpose and the assumptions he wants to make.

Assumptions that impose direct or indirect constraints on the differential variables lead to high index models. But a modeller cannot simply impose some constraints on the differential variables. A constraint is always imposed by some “driving force” (i.e. a flow or reaction, since these are the only forces that appear in the differential equations), which forces the differential variables to adhere to the constraint. This means that instead of giving a description for the rate, a flow or reaction remains “unmodelled” and a (direct or indirect) constraint on the differential variables is given.

There can be several reasons why a modeller wants to introduce assumptions:

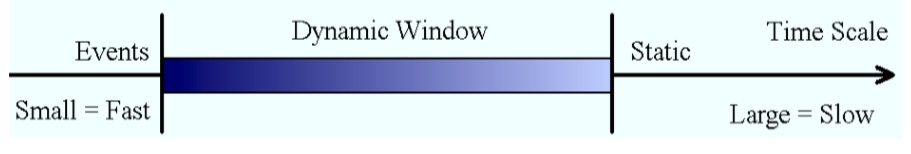

In our previous blog post the term time scale was introduced. Modelling systems in a range of time scales, the capacity terms are chosen accordingly but also the transport and the production terms. For parts being outside of the time scale in which the dynamics are being modelled, a pseudo steady-state assumption is made. For example, (very) fast reactions – fast in the measure of the considered range of time scale – are assumed to reach the equilibrium (for all practical purposes) instantaneously, and very slow ones do not appreciably occur and may be simply ignored.

In a next blog post we will zoom into “steady-state assumptions” and “events and discontinuities”.

What is your experience with constraints and assumptions? Did you ever run into computational problems without knowing what the cause was?

I invite to post your experiences, insights and/or suggestions in the comment box below, such that we can all learn something from it.

To your success!

Mathieu.

———————————————–

Constraints and assumptions describe all kinds of algebraic relationships between process quantities that must hold at all times. A volume constraint, for example, restricts the volume of a simple system or the sum of the volumes of a set of systems. Assumptions may result in models that are cumbersome to solve. Potential problems can very often be avoided at an early stage of model development by keeping clear of certain assumptions or by dealing directly with the cause of the potential problems.

As discussed in the previous blog post, one can distinguish between several types of assumptions: structural assumptions (i.e. the construction of the physical topology), order of magnitude assumptions (very small versus very large) and assumptions on relative time scale (very slow versus very fast). These assumptions are usually introduced with a goal, namely to simplify the description of the behaviour by neglecting what is considered insignificant in view of the application one has in mind for the model. Whilst such assumptions do indeed simplify the description, they are also the source of numerous problems, such as “index problems”, which make solving the equations very difficult.

High-index Models

Dynamic process models, as derived with our modelling methodology, consist of differential and algebraic equations (DAEs). Unfortunately, most engineers have little knowledge of the theory of DAEs, since most of the calculations that have to be performed during education are steady-state simulations. In the rare case that dynamics are considered, a mathematical description of the (usually very simple) model is derived in the form of ordinary differential equations (ODEs).

One of the major advantages of writing a model in DAE form as opposed to ODE form is that a modeller does not have to perform a set of often cumbersome mathematical manipulations, such as substitution and symbolic differentiation. Dynamic process models can be divided into “low index” models (index zero and one) and “high index” models (index two and higher, and some index one models). The differential index, or simply index, is a measure of the problems related to initialisation and integration of dynamic process models. The problems related to solving a dynamic process model increase with increasing index.

We will not go into details nor give a mathematically correct definition of the index of a model at this stage, but will just state that the index of a DAE is the number of times that all or part of a DAE must be differentiated (with respect to time) in order to get an ODE.

It is not recommended, by the way, to actually perform this series of differentiations as a general solution procedure for DAEs. Rather, the number of differentiation steps that would be required in theory turns out to be an important quantity in understanding the behaviour of numerical methods.

According to the definition an ODE (either explicit or implicit) has index zero. DAEs with index zero and one are generally much simpler to understand (and much simpler to solve) than DAEs with index two or higher.
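To make the informal definition above concrete, here is a small sketch of the differentiation step for a semi-explicit DAE. The symbols are assumptions chosen for illustration and are not taken from the original post: x denotes the differential variables, z the algebraic variables, and f and g the right-hand sides of the differential and algebraic equations.

```latex
% Semi-explicit DAE (illustrative notation: x differential, z algebraic)
\begin{aligned}
\dot{x} &= f(x, z) \\
0 &= g(x, z)
\end{aligned}

% Differentiate the algebraic equations once with respect to time
0 = \frac{\partial g}{\partial x}\,\dot{x} + \frac{\partial g}{\partial z}\,\dot{z}
\quad\Longrightarrow\quad
\dot{z} = -\left(\frac{\partial g}{\partial z}\right)^{-1}
           \frac{\partial g}{\partial x}\, f(x, z)
```

The last step is only possible if the Jacobian ∂g/∂z is non-singular. In that case a single differentiation yields an ODE for z, so the model has index one. If ∂g/∂z is singular (for instance because some algebraic variable does not appear in g at all), further differentiations are needed and the index is two or higher.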

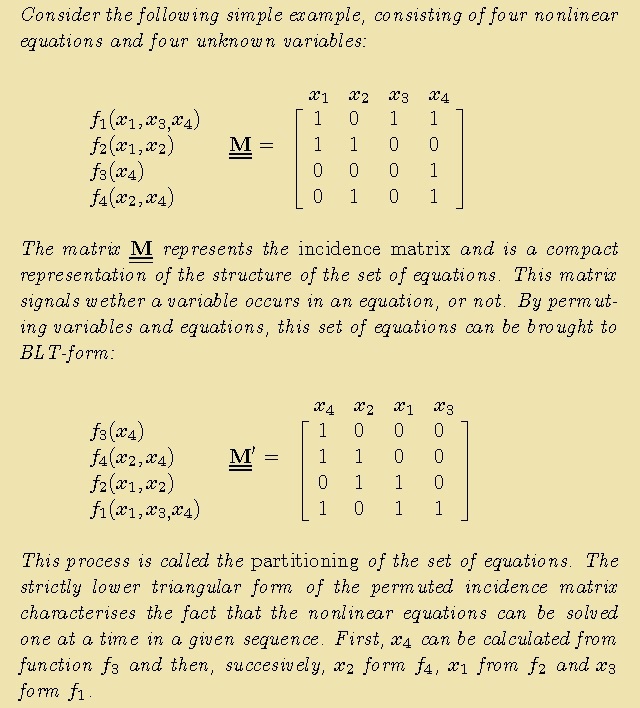

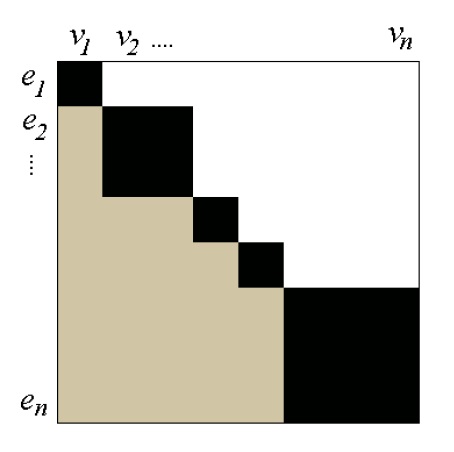

With our modelling method we strive to produce semi-explicit index one models, since these can be easily used for simulation by any DAE-solver. Higher index models must either be simulated directly by a special integrator which tackles high index DAEs, or be transformed to semi-explicit index one and integrated. Two simple tests that guarantee structurally semi-explicit index one models are:

- All algebraic variables must be present in the algebraic equations.

- It must be possible to assign each algebraic equation to an algebraic variable. Assignment of all equations and variables must be possible.
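The two tests above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in our modelling tools: the function name `check_structural_index_one`, the dictionary-based input format and the example variable names are all hypothetical. The check is purely structural; it only looks at which algebraic variables occur in which algebraic equations and says nothing about numerical singularity.

```python
def check_structural_index_one(equations, algebraic_vars):
    """Structural check of the two tests (hypothetical helper).

    equations: dict mapping an algebraic-equation name to the set of
               algebraic variables that occur in that equation.
    algebraic_vars: set of all algebraic variable names.
    Returns (ok, assignment) where assignment maps variable -> equation.
    """
    # Test 1: every algebraic variable occurs in some algebraic equation.
    present = set().union(*equations.values())
    missing = set(algebraic_vars) - present

    # Test 2: assign each equation to a distinct variable
    # (bipartite matching with augmenting paths).
    assignment = {}  # variable -> equation currently assigned to it

    def try_assign(eq, seen):
        for var in sorted(equations[eq]):
            if var in algebraic_vars and var not in seen:
                seen.add(var)
                # Take a free variable, or re-route the equation holding it.
                if var not in assignment or try_assign(assignment[var], seen):
                    assignment[var] = eq
                    return True
        return False

    assigned = sum(try_assign(eq, set()) for eq in equations)
    complete = assigned == len(equations) == len(algebraic_vars)
    return (not missing) and complete, assignment


# Two equations, two algebraic variables: an assignment exists.
ok, assignment = check_structural_index_one(
    {"e1": {"F", "p"}, "e2": {"F"}}, {"F", "p"}
)

# A constraint that contains no algebraic variable at all fails both tests.
ok_constraint, _ = check_structural_index_one(
    {"volume_constraint": set()}, {"F_out"}
)
```

In the first example the equation `e2` can only be assigned to `F`, so `e1` is re-routed to `p`. In the second example the algebraic variable `F_out` appears in no algebraic equation, which is the structural signature of a potential high-index formulation.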

Assumptions Leading to High-index Models

Many high-index problems arise because the model purpose has not been carefully considered: the modeller does not want to include some of the rapid dynamics in the model, makes assumptions that may not be essential, or wants to include certain variables in the model.

Our modelling method forces a model designer to be more aware of the assumptions he makes. Therefore, potential high index model formulations can be detected and/or avoided at an early stage of the model development. Knowing the causes of high index formulations helps the model designer in carefully considering the modelling purpose and the assumptions he wants to make.

Assumptions that impose direct or indirect constraints on the differential variables lead to high index models. But a modeller cannot simply impose some constraints on the differential variables. A constraint is always imposed by some “driving force” (i.e. a flow or reaction, since these are the only forces that appear in the differential equations), which forces the differential variables to adhere to the constraint. This means that instead of giving a description for the rate, a flow or reaction remains “unmodelled” and a (direct or indirect) constraint on the differential variables is given.
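As an illustration of an “unmodelled” flow, consider the volume constraint mentioned earlier applied to a single tank. This is a hypothetical worked example, not taken from the original derivation; it assumes a constant maximum volume V_max, a known inlet flow F_in(t), and an outlet flow F_out for which no rate equation is given.

```latex
% Volume balance (differential equation) and the assumption "tank is always full"
\frac{dV}{dt} = F_{in} - F_{out}, \qquad 0 = V - V_{max}

% First differentiation of the constraint: still an algebraic relation
0 = \frac{dV}{dt} = F_{in} - F_{out}
\quad\Longrightarrow\quad F_{out} = F_{in}

% Second differentiation: an ODE for the unmodelled flow
\frac{dF_{out}}{dt} = \frac{dF_{in}}{dt}
```

The constraint acts directly on the differential variable V and does not contain the algebraic variable F_out. Two differentiations are needed before an ODE for F_out appears, so under these assumptions the formulation has index two. Supplying a rate equation for F_out instead (for example, an outflow that depends on the volume) would remove the constraint and give an index one model.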

There can be several reasons why a modeller wants to introduce assumptions:

- Only slow dynamics of the process are of interest. In this case the rapid dynamics can be neglected.

- Difficulties in finding reliable rate equations may force a modeller to make quasi steady-state assumptions.

- In order to perform model reduction, simplifying assumptions may be introduced.

In our previous blog post the term time scale was introduced. When a system is modelled over a given range of time scales, not only the capacity terms but also the transport and production terms are chosen to match that range. For the parts that fall outside the time scale in which the dynamics are modelled, a pseudo steady-state assumption is made. For example, (very) fast reactions, fast relative to the considered range of time scales, are assumed to reach equilibrium (for all practical purposes) instantaneously, while very slow ones do not occur appreciably and may simply be ignored.

In the next blog post we will zoom in on “steady-state assumptions” and “events and discontinuities”.

What is your experience with constraints and assumptions? Did you ever run into computational problems without knowing what the cause was?

I invite you to post your experiences, insights and/or suggestions in the comment box below, so that we can all learn something from them.

To your success!

Mathieu.

———————————————–